In Part 1 of this series I went over setting up the StarWind iSCSI SAN, and in Part 2, configuring the iSCSI SAN on the cluster nodes and installing and testing a multi-site failover cluster.

Today I will go over setting up the File Server service, so let's get started.

1. Log into Failover Cluster Manager, right-click the cluster name and select Configure a Service or Application.

2. The High Availability Wizard starts; click Next.

3. Select File Server and click Next.

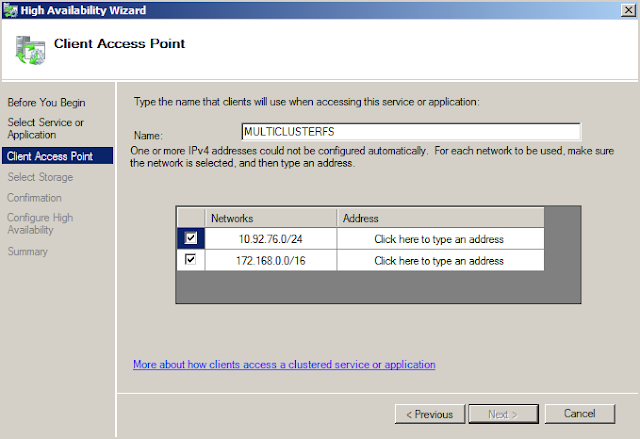

4. Provide a name and one IP address from each subnet (two IP addresses in total), then click Next.

Note: before you click Next, make sure you are either a domain administrator or have pre-staged the computer object for the name in Active Directory. Please refer to this TechNet article, which explains this in depth.

5. Pick the disk on which you want to provision the file share and click Next.

6. Review the settings and click Next.

7. Click Finish.

8. We have now successfully created the File Server service on the multi-site cluster.

9. To test failover, expand Services and Applications under the cluster name, right-click the File Server name and select "Move to node….", then click "Move …. to Node name".

10. We have successfully failed the resources over to the other node.

11. We have a problem; let's go over it.

I am logged in on the passive node VM2008C, which is on the 10.92 subnet. The Shared Folders column does not show any shares, so let's check the DNS servers on both sites:

Because the group moved to the node on the other subnet (172.168), the File Server cluster name (MULTICLUSTERFS) updated its host record on the nearest DNS server, changing the IP address from 10.92.76.22 to 172.168.0.22.

Because node VM2008C still sees the old IP address (10.92.76.22), the shares are not visible in Failover Cluster Manager on VM2008C.

Note: none of the client computers on this subnet (10.92.76.0) will be able to access any file share resources either.

Let's see if Failover Cluster Manager can see the shares on the active node VM2008D. Sure it can, and client computers on that subnet (172.168.0.0) will also be able to access the file share resources.

Resolution:

After DNS replication (timing depends on your environment) and expiration of the host record TTL (1200 seconds), the passive node can see the shares in Failover Cluster Manager and the clients can reach them again.

But waiting for DNS replication and host record TTL expiration defeats the whole purpose of multi-site clustering.
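If you prefer the command line, the failover test from steps 9 and 10 and the DNS check from step 11 can be reproduced with a short PowerShell sketch. It assumes the FailoverClusters module that ships with Windows Server 2008 R2, a File Server group named MULTICLUSTERFS (the group name is an assumption; Get-ClusterGroup lists the real one) and the node names used in this article. Run the lookup part on the passive node VM2008C.

```powershell
# Sketch only: the group name is an assumption, node names come from this article.
Import-Module FailoverClusters

$groupName = "MULTICLUSTERFS"   # assumed name of the File Server group
$fsName    = "MULTICLUSTERFS"   # client access point (DNS name) of the file server

# Steps 9-10: fail the File Server group over to the node on the 172.168 subnet.
Move-ClusterGroup -Name $groupName -Node "VM2008D"

# Step 11: list the address(es) this machine currently resolves the name to.
# Before the fix, VM2008C keeps getting 10.92.76.22 until the new record
# replicates to its DNS server and the cached entry's TTL expires.
[System.Net.Dns]::GetHostAddresses($fsName) | ForEach-Object { $_.IPAddressToString }

# Show the locally cached record, including its remaining "Time To Live".
ipconfig /displaydns | Select-String -Pattern $fsName -Context 0,6

# Clearing the local cache only helps once the DNS server itself has the new record.
ipconfig /flushdns
```

Comparing the GetHostAddresses output on VM2008C and VM2008D shows the stale record on one site and the updated one on the other.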

How to fix:

We have to follow a two-step process:

1. Register all of the IP addresses in DNS for the File Server cluster name (MULTICLUSTERFS). We do that by running a PowerShell command from one of the cluster nodes (the setting is stored in the cluster configuration, so it applies to every node) and then moving the resources to the other node, which restarts the name so the change takes effect.

With this setting, whenever a client machine looks up the host record for the File Server cluster name, DNS returns both registered IP addresses.

Use the following PowerShell command, replacing File server Cluster Name with the name of your Network Name resource:

Get-ClusterResource "File server Cluster Name" | Set-ClusterParameter RegisterAllProvidersIP 1

2. The default host record TTL is 1200 seconds (20 minutes), so a client may have to wait up to 20 minutes before it requests an updated host record.

We change the value from 1200 to 300 seconds (5 minutes is the recommended value) with a PowerShell command and then fail over the nodes so the change takes effect.

The command for this step is:

Get-ClusterResource "File server Cluster Name" | Set-ClusterParameter HostRecordTTL 300

For example:

Get-ClusterResource "MULTICLUSTERFS" | Set-ClusterParameter HostRecordTTL 300
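Both steps can be combined into one script and checked afterwards. This is a minimal sketch, assuming the Network Name resource and the File Server group are both called MULTICLUSTERFS (confirm with Get-ClusterResource and Get-ClusterGroup) and that moving the group to VM2008C is an acceptable way to restart the name, as described above. It only uses the two parameters already shown in this article.

```powershell
# Sketch: apply both multi-site DNS settings from any one cluster node.
# Assumption: resource and group names below match your cluster.
Import-Module FailoverClusters

$nameResource = "MULTICLUSTERFS"   # Network Name resource of the File Server
$groupName    = "MULTICLUSTERFS"   # File Server group (assumed same name)

# Step 1: register every IP address of the name in DNS, not only the online one.
Get-ClusterResource $nameResource | Set-ClusterParameter RegisterAllProvidersIP 1

# Step 2: lower the host record TTL from the default 1200 seconds to 300 seconds.
Get-ClusterResource $nameResource | Set-ClusterParameter HostRecordTTL 300

# The new values apply once the name goes offline and online again, so move the
# group to the other node (this briefly interrupts access to the shares).
Move-ClusterGroup -Name $groupName -Node "VM2008C"

# Confirm the values now stored in the cluster configuration.
Get-ClusterResource $nameResource | Get-ClusterParameter |
    Where-Object { $_.Name -eq "RegisterAllProvidersIP" -or $_.Name -eq "HostRecordTTL" }
```

Running the final query again after a later failover is a quick way to confirm the settings survived.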

Note: Microsoft Technical Evangelist Symon Perriman has an excellent video that goes into detail about this two-step process. I highly recommend watching it, as there are other settings, such as the cross-subnet delay, that need to be reviewed before putting the cluster into production.
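To see where the cross-subnet settings currently stand, the cluster's heartbeat properties can be displayed with a read-only command. A small sketch, assuming the FailoverClusters module on a cluster node; the commented-out change is illustrative only, and the right value depends on the latency between your sites.

```powershell
# Sketch: display the heartbeat settings before going to production (read-only).
# Delay = heartbeat interval in milliseconds; Threshold = missed heartbeats tolerated.
Import-Module FailoverClusters

Get-Cluster | Format-List SameSubnetDelay, SameSubnetThreshold, CrossSubnetDelay, CrossSubnetThreshold

# Illustrative only: relax the cross-subnet heartbeat interval to 2 seconds.
# (Get-Cluster).CrossSubnetDelay = 2000
```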

Additional Step:

Reverse lookups for the file share cluster name will fail. To fix this, right-click the file share cluster name, go to Properties, select the "Publish PTR records" check box, apply the change and fail over the cluster nodes.
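The same check box can be set from PowerShell. This sketch assumes the Network Name private property behind "Publish PTR records" is PublishPTRRecords, that the resource and group are both named MULTICLUSTERFS, and that reverse lookup zones exist for both subnets; without those zones there is nowhere to publish the PTR records.

```powershell
# Sketch: PowerShell counterpart of the "Publish PTR records" check box.
# Assumptions: resource/group names below, reverse zones exist for both subnets.
Import-Module FailoverClusters

Get-ClusterResource "MULTICLUSTERFS" | Set-ClusterParameter PublishPTRRecords 1

# As with the other settings, fail over so the name re-registers in DNS.
Move-ClusterGroup -Name "MULTICLUSTERFS" -Node "VM2008D"

# Check reverse lookups for the two addresses used in this article.
# GetHostEntry typically throws when no PTR record exists, so report that case.
foreach ($ip in "10.92.76.22", "172.168.0.22") {
    try   { "{0} -> {1}" -f $ip, [System.Net.Dns]::GetHostEntry($ip).HostName }
    catch { "{0} -> no PTR record found" -f $ip }
}
```

Only the address that is currently online may resolve, depending on how the name registers its records.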

We have now configured almost all of the settings; the next step is to start provisioning the shares.

I will not go over these steps, as there are many articles out there that cover them step by step.

This ends Part 3 of the series. In the last part I will go over setting up SQL Server 2012 on the two-node multi-site cluster.

Recommended Articles:

- Cluster Resource Dependency Expressions blog: http://blogs.msdn.com/b/clustering/archive/2008/01/28/7293705.aspx

- The Microsoft Support Policy for Windows Server 2008 or Windows Server 2008 R2 Failover Clusters: http://support.microsoft.com/kb/943984

- What's New in Failover Clusters for Windows Server 2008 R2: http://technet.microsoft.com/en-us/library/dd621586(WS.10).aspx

- Failover Cluster Step-by-Step Guide: Configuring the Quorum in a Failover Cluster: http://technet.microsoft.com/en-us/library/cc770620(WS.10).aspx

- Requirements and Recommendations for a Multi-site Failover Cluster: http://technet.microsoft.com/en-us/library/dd197575(WS.10).aspx


Hey man,

Interesting write-up, but how do you solve the problem of the storage only being owned by the master node? You show that you are able to create and fail over a file server role, but with two independent storage arrays at different sites you will get errors (as I do now) that basically say that unless the storage is online at the master, it can't be used for the file server role. So how is it possible to create a file server role that relies on hardware-level replication when Windows does not permit you to use the storage defined at each site?

To anyone who comes across this later: my resolution was to create the file server role, then bring it fully offline (including the storage), then bring the nodes for the site holding the role offline, ensure the role was moved over to the remote site, add the other storage from the remote site, create an OR dependency for the role across the two storage options I was trying to protect, and then bring everything back online.
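The OR dependency described in the comment above can also be set from PowerShell. A minimal sketch: the resource names ("FileServer-(MULTICLUSTERFS)", "Cluster Disk 1", "Cluster Disk 2") are hypothetical placeholders, so list the real ones with Get-ClusterResource first. The expression syntax follows the Cluster Resource Dependency Expressions post in the recommended articles.

```powershell
# Sketch: let a resource come online with either site's disk.
# All resource names here are hypothetical; substitute your own.
Import-Module FailoverClusters

$resource = "FileServer-(MULTICLUSTERFS)"   # hypothetical resource that needs the storage

# Show the current expression first: Set-ClusterResourceDependency replaces it,
# so anything the resource already depends on must be kept in the new one.
Get-ClusterResourceDependency -Resource $resource

# Example: keep an existing network name dependency and accept either disk.
$expression = "[MULTICLUSTERFS] and ([Cluster Disk 1] or [Cluster Disk 2])"
Set-ClusterResourceDependency -Resource $resource -Dependency $expression
```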
